Train the model to convert lifted NL into lifted STL

Temporal Logic (TL) can be used to rigorously express complex high-level specifications for systems in many engineering applications. The translation between natural language (NL) and TL has been under-explored due to the lack of datasets and of models that generalize across application domains.

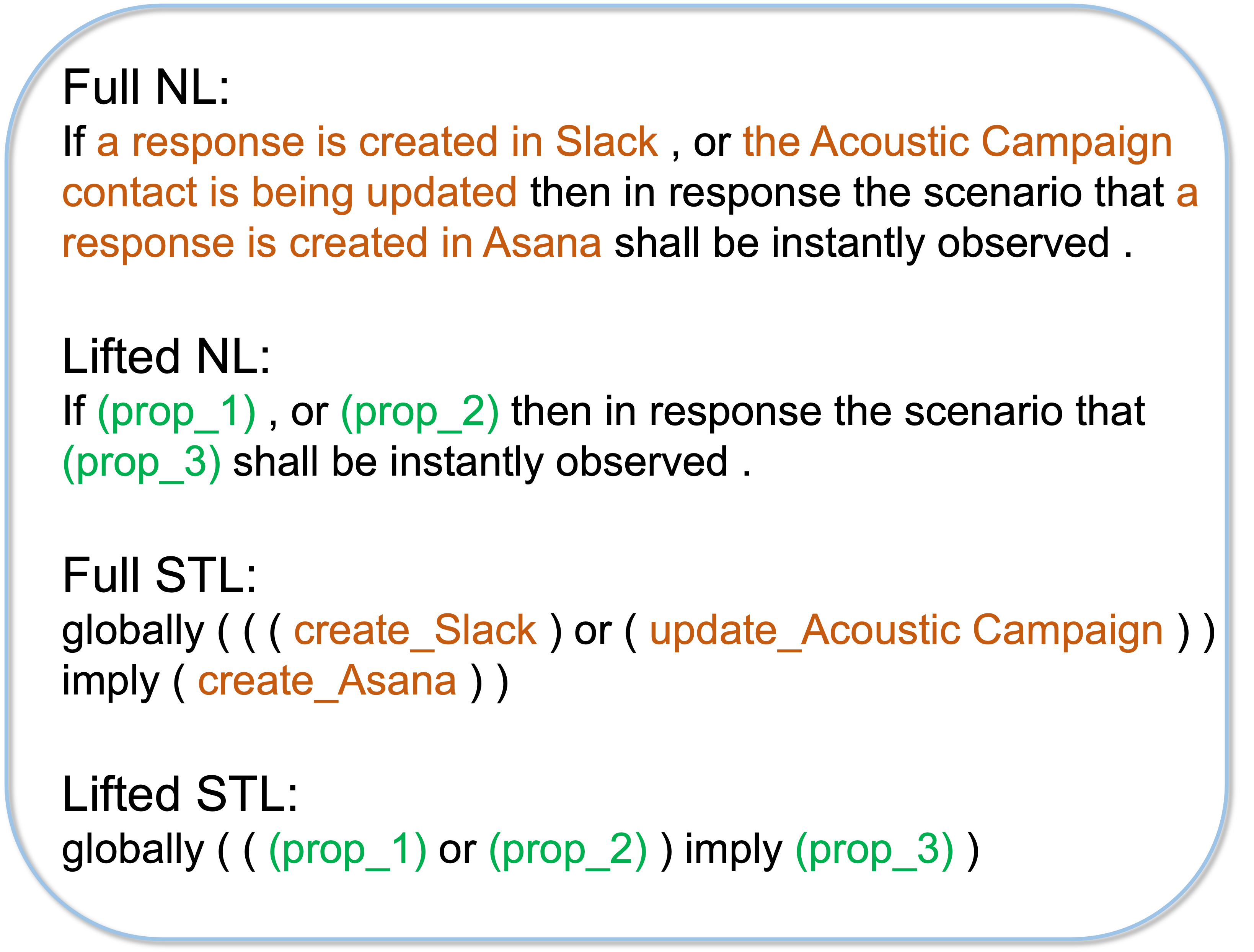

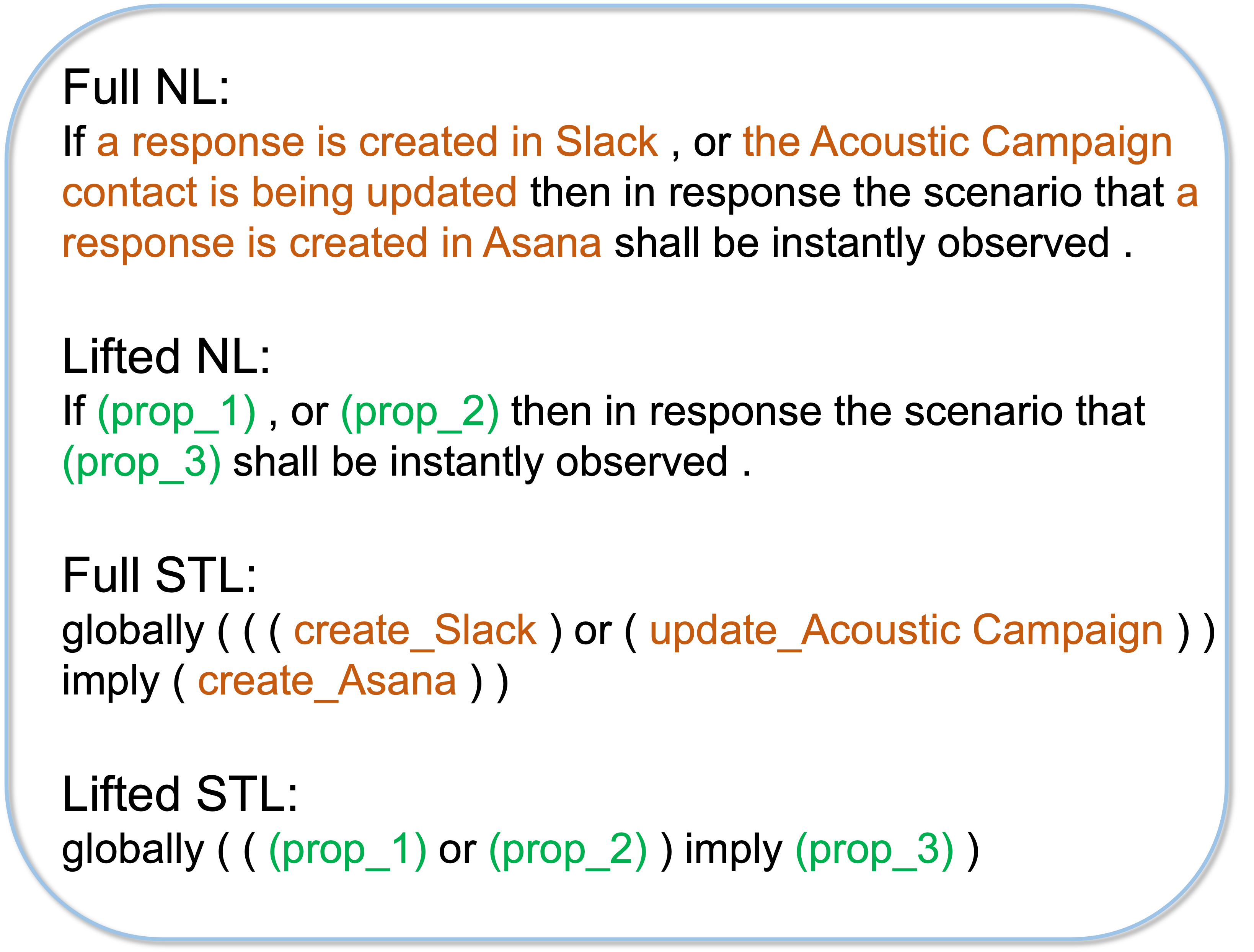

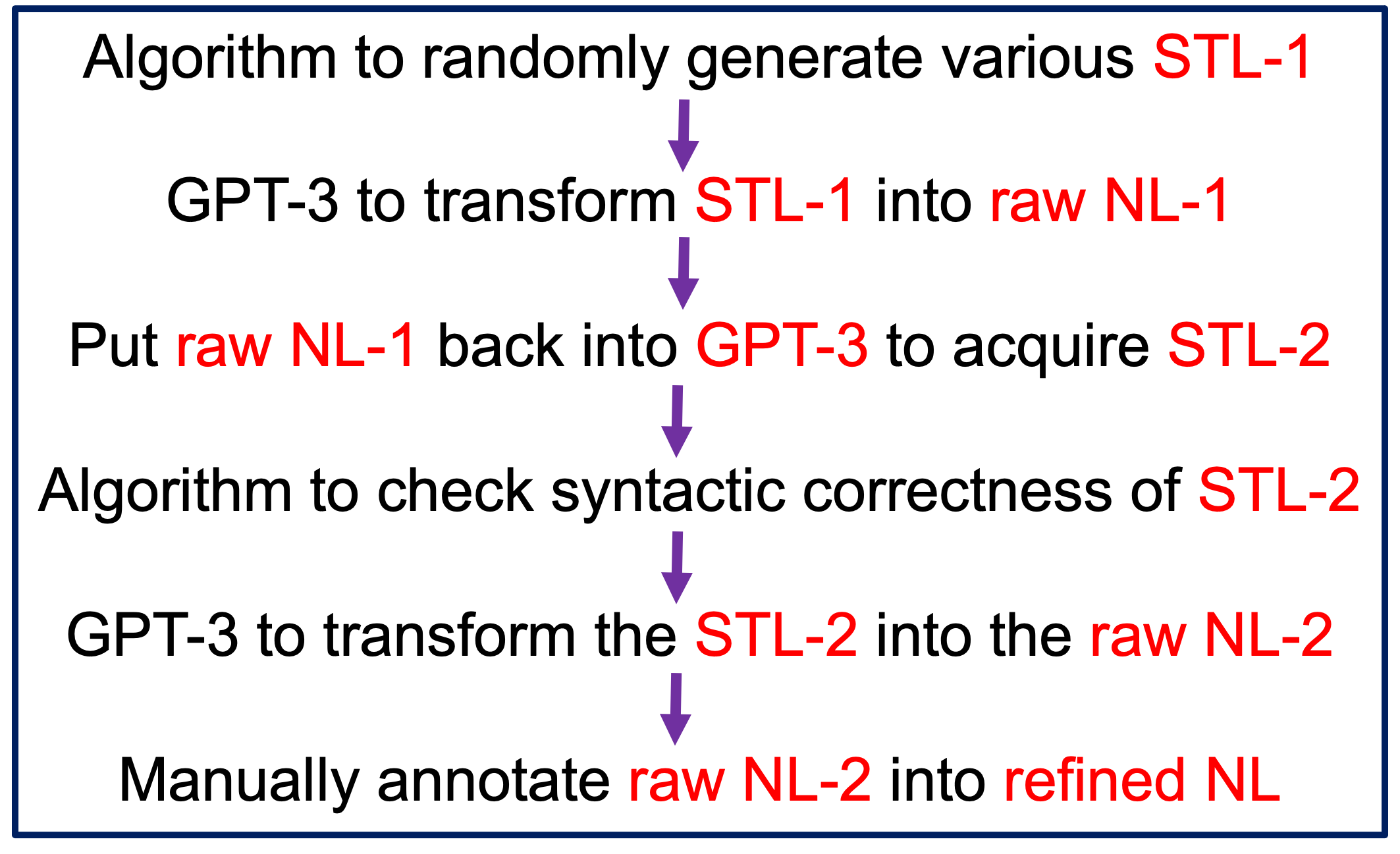

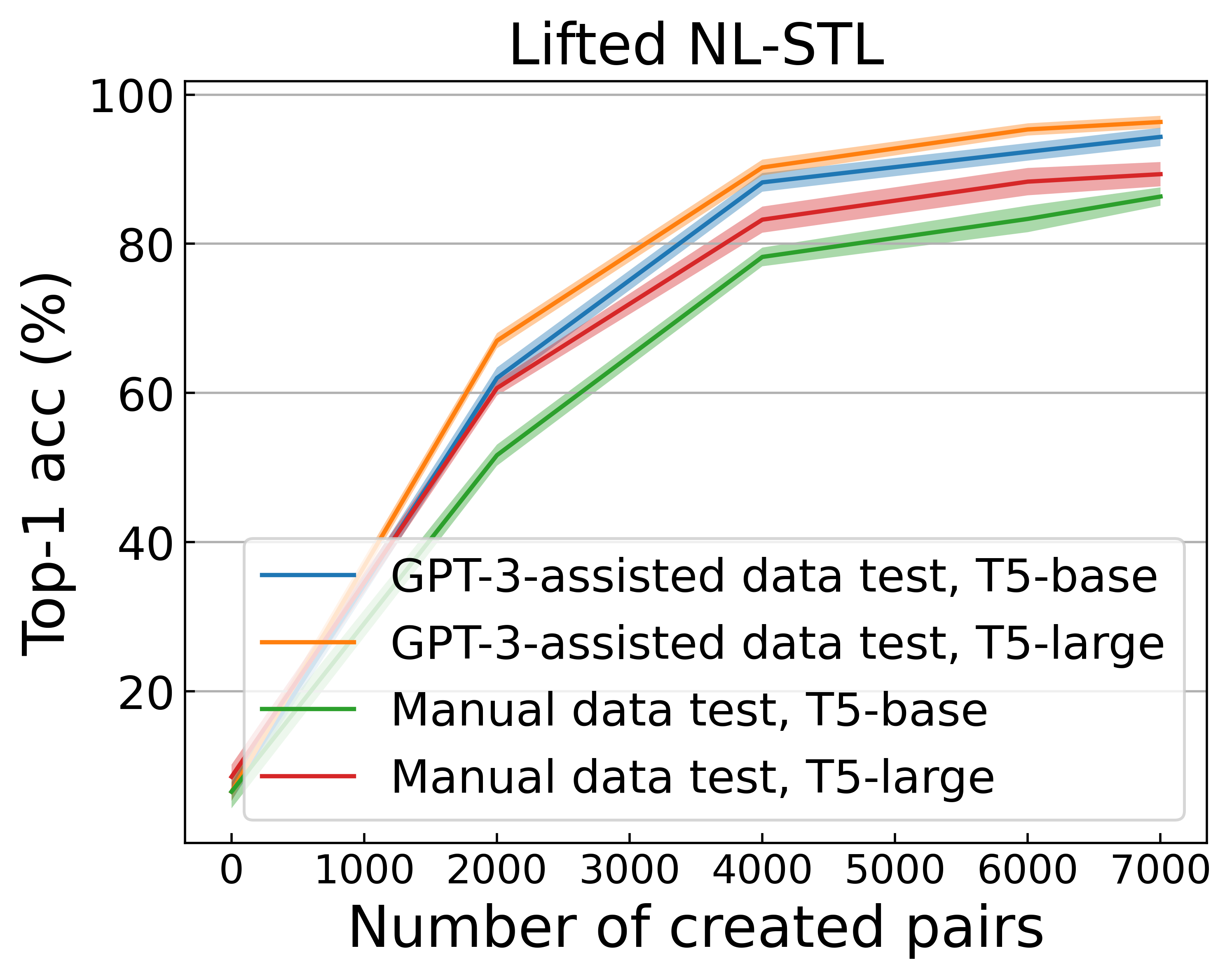

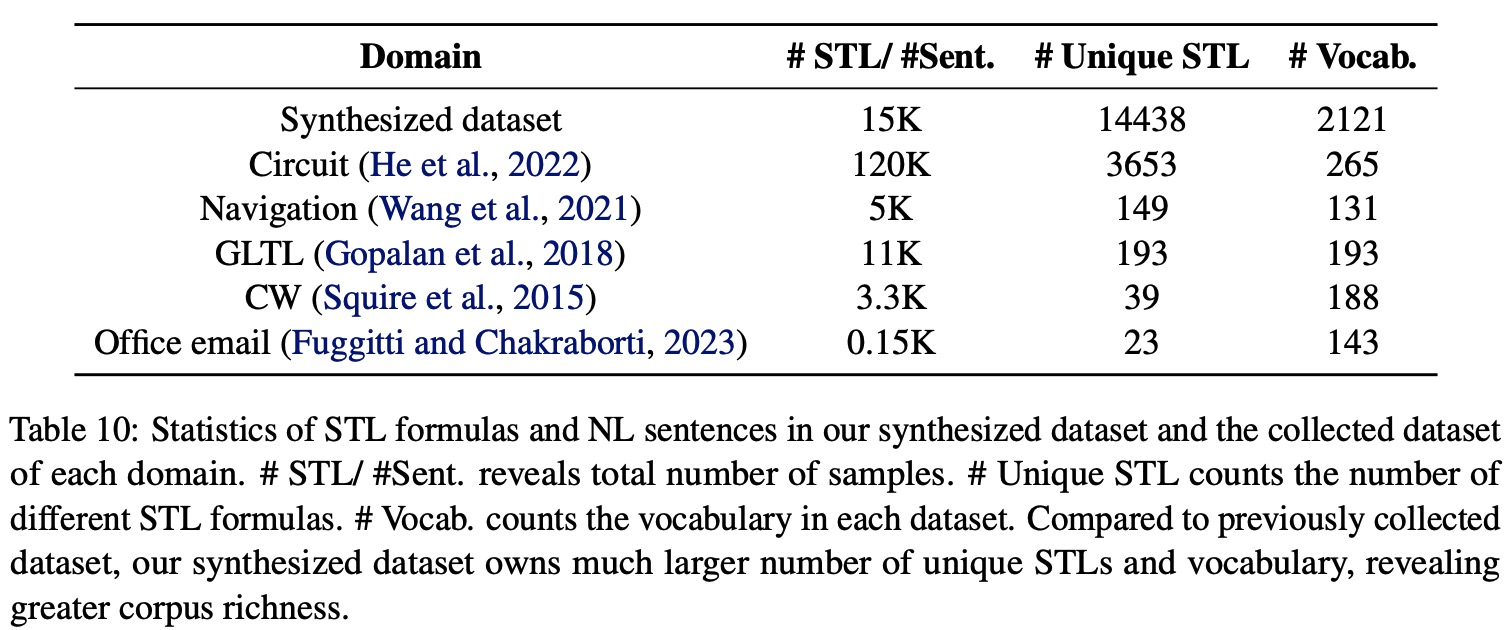

We propose an accurate and generalizable framework for transforming English instructions from NL to TL, exploring the use of Large Language Models (LLMs) at multiple stages. Our contributions are twofold. First, we develop a framework that combines LLMs and human annotation to create a dataset of NL-TL pairs, and we publish a dataset with 28K NL-TL pairs. Second, we finetune T5 models on the lifted versions of the NL and TL, i.e., versions in which the specific Atomic Propositions (APs) are hidden. The enhanced generalizability originates from two aspects: 1) lifted NL-TL pairs characterize common logical structures without the constraints of specific domains; 2) the use of LLMs in dataset creation greatly enriches the corpus.
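To make the idea of lifting concrete, here is a minimal Python sketch of hiding and restoring APs. It is an illustration only, not the code used in this project: the helper names `lift` and `ground`, the `prop_i` placeholder format, the operator spellings (`globally`, `imply`, `finally`), and the example sentence are all assumptions made for this example. It also assumes the AP phrases and symbols have already been identified.

```python
import re
from typing import Dict, Tuple


def lift(nl: str, tl: str, ap_map: Dict[str, str]) -> Tuple[str, str, Dict[str, str]]:
    """Hide domain-specific APs behind placeholders (hypothetical helper).

    ap_map maps each NL phrase to the TL symbol that denotes the same AP.
    Returns the lifted NL, the lifted TL, and a placeholder -> symbol table.
    """
    lifted_nl, lifted_tl = nl, tl
    table: Dict[str, str] = {}
    for i, (nl_phrase, tl_symbol) in enumerate(ap_map.items(), start=1):
        placeholder = f"prop_{i}"  # assumed placeholder naming
        lifted_nl = lifted_nl.replace(nl_phrase, placeholder)
        # Match the symbol as a whole word so operators are left untouched.
        lifted_tl = re.sub(rf"\b{re.escape(tl_symbol)}\b", placeholder, lifted_tl)
        table[placeholder] = tl_symbol
    return lifted_nl, lifted_tl, table


def ground(lifted_tl: str, table: Dict[str, str]) -> str:
    """Substitute the original AP symbols back into a lifted TL formula."""
    return re.sub(r"\bprop_\d+\b", lambda m: table[m.group(0)], lifted_tl)


# Illustrative example; the sentence and formula are made up for this sketch.
nl = "If the alarm rings, eventually open the door."
tl = "globally ( alarm_rings imply finally door_open )"
ap_map = {"the alarm rings": "alarm_rings", "open the door": "door_open"}

lifted_nl, lifted_tl, table = lift(nl, tl, ap_map)
print(lifted_nl)  # If prop_1, eventually prop_2.
print(lifted_tl)  # globally ( prop_1 imply finally prop_2 )
print(ground(lifted_tl, table) == tl)  # True
```

Because the lifted pair contains only placeholders and logical operators, the same pair can stand in for instructions from any domain that share this logical structure.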

This work is part of a broader research thread around

Other work on STL-based motion planning from our lab includes:

@article{chen2023nl2tl,
  title={NL2TL: Transforming Natural Languages to Temporal Logics using Large Language Models},
  author={Chen, Yongchao and Gandhi, Rujul and Zhang, Yang and Fan, Chuchu},
  journal={arXiv preprint arXiv:2305.07766},
  year={2023}
}